Llama-2-13b-chatggmlv3q4_0bin offloaded 4343 layers to GPU. Its likely that you can fine-tune the Llama 2-13B model using LoRA or QLoRA fine-tuning with a single consumer. What are the minimum hardware requirements to run the models on a local machine. For good results you should have at least 10GB VRAM at a minimum for the 7B model though you can sometimes see. A notebook on how to fine-tune the Llama 2 model with QLoRa TRL and Korean text classification dataset. What are the hardware SKU requirements for fine-tuning Llama pre-trained models. Discover how to run Llama 2 an advanced large language model on your own machine..

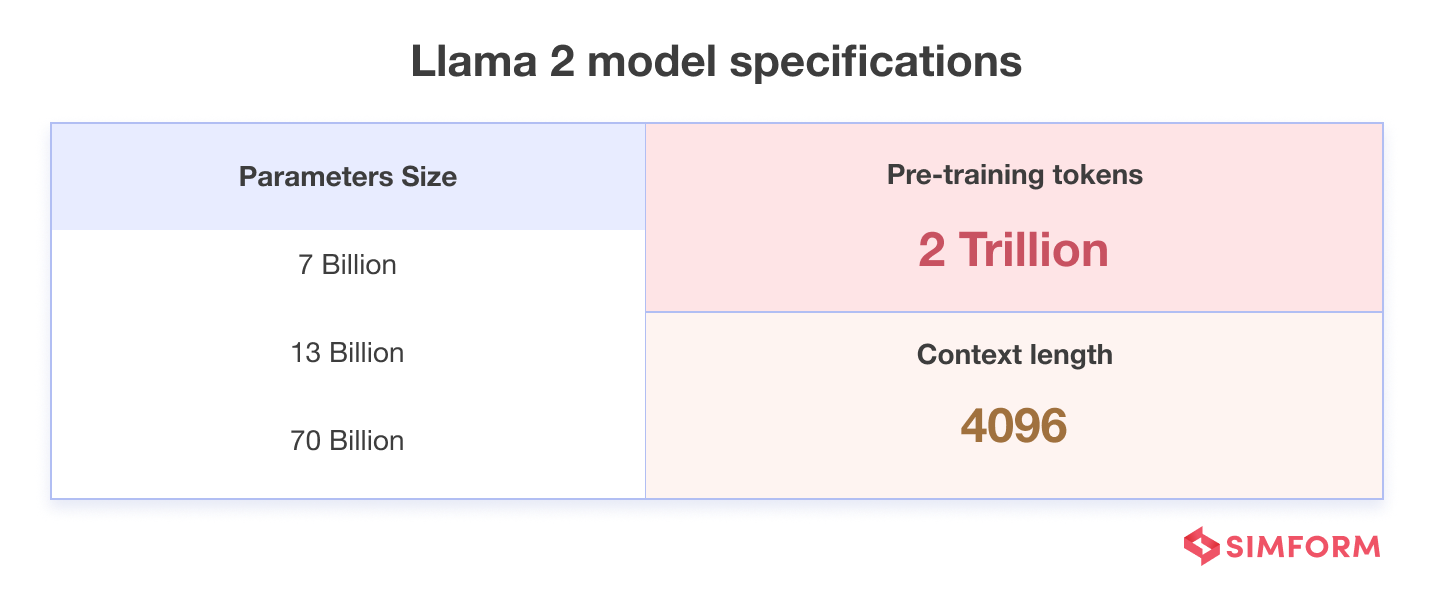

Medium balanced quality - prefer using Q4_K_M. . . Coupled with the release of Llama models and parameter-efficient techniques to fine-tune them LoRA. Llama 2 is a series of LLMs released by Meta ranging from 7B to 70B parameters Llama 2 serves as a foundational. Download Llama 2 encompasses a range of generative text models both pretrained and fine-tuned with sizes from 7. Please provide a detailed written description of what llamacpp did instead. ..

Llama-2-13b-chat-german is a variant of Metas Llama 2 13b Chat model finetuned on an additional dataset in German language This model is optimized for German text providing. Description This repo contains GGUF format model files for Florian Zimmermeisters Llama 2 13B German Assistant v4 About GGUF GGUF is a new format introduced by the llamacpp. Meet LeoLM the first open and commercially available German Foundation Language Model built on Llama-2 Our models extend Llama-2s capabilities into German through. Built on Llama-2 and trained on a large-scale high-quality German text corpus we present LeoLM-7B and 13B with LeoLM-70B on the horizon accompanied by a collection. Llama 2 13b strikes a balance Its more adept at grasping nuances compared to 7b and while its less cautious about potentially offending its still quite conservative..

. Llama 2 encompasses a range of generative text models both pretrained and fine-tuned with sizes from 7. Run Llama-2-13B-chat locally on your M1M2 Mac with GPU inference. Ollama is an open-source macOS app for Apple Silicon that lets you run create and share large. Running Llama 2 with gradio web UI on GPU or CPU from anywhere LinuxWindowsMac. The model llama-2-7b-chatggmlv3q4_0bin will be automatically downloaded. Download 3B ggml model here llama-213b-chatggmlv3q4_0bin Note Download takes a while due to..

Comments